sciware

Sciware

Flatiron Summer Workshops

https://sciware.flatironinstitute.org/40_SummerIntro

- Schedule

- July 2: Summer Sciware 4

- All 10 AM - noon in the IDA Auditorium (162 Fifth Ave, 2nd Floor)

Today’s agenda

- Cluster overview, access

- Modules and software

- VS code remote

- Python environments and Jupyter kernels

- Slurm and parallelization

- Filesystems and storage

Cluster overview

<img height=80% width=80% margin=”10px auto” class=”plain” src=”assets/cluster/overview_1.png”>

Rusty

- 4 login nodes (

ssh rusty) - ~140k CPU cores, ~1400 nodes, 1.8PB RAM (~1TB/node)

- 150 H200, 240 H100, 288 A100 GPUs

https://wiki.flatironinstitute.org/SCC/Hardware/Rusty

Popeye

- Completely separate cluster (separate storage, job queue)

- ~42k CPU cores, ~800 nodes, 700TB RAM (~1TB/node)

https://wiki.flatironinstitute.org/SCC/Hardware/Popeye

Remote access

- Setup your cluster account

- Mentor requests account on FIDO, provides PIN

- Set your password on FIDO

- Get a one-time verification code on FIDO

ssh -p 61022 USERNAME@gateway.flatironinstitute.org- Setup google-authenticator

- From gateway:

ssh rusty(orssh popeye)

https://wiki.flatironinstitute.org/SCC/RemoteConnect

Modules & software

Overview

- Most software you’ll use on the cluster will either be:

- In a module we provide

- Downloaded/built/installed by you (often using modules)

- By default you only see the base system software (Rocky8)

module avail: Core

- See what’s available:

module avail

------------- Core --------------

gcc/10.5.0

gcc/11.4.0 (D)

gcc/12.2.0

openblas/single-0.3.26 (S,L)

openblas/threaded-0.3.26 (S,D)

python/3.10.13 (D)

python/3.11.7

...

D: default version (also used to build other packages)L: currently loadedS: sticky

module load or ml

- Load modules with

module loadorml NAME[/VERSION] ...> python -V Python 3.6.8 > ml python > python -V Python 3.10.13 - Remove with

module unload NAMEorml -NAME - Can use partial versions, and also switch

> module load python/3.11 The following have been reloaded: (don't be alarmed) > python -V

module show

- Try loading some modules

- Find some command one of these modules provides

- (Hint: look at

module show MODULE)

Other module commands

module listto see what you’ve loadedmodule resetto reset to default modulesmodule spider MODULEto search for a module or package> module spider numpy > module spider numpy/1.26.4- Some modules are “hidden” behind other modules:

module spider mpi4py/3.1.5

- Some modules are “hidden” behind other modules:

module releases

- We keep old sets of modules, and regularly release new ones

- Try to use the default when possible

modules/2.3-20240529 (S,L,D)

modules/2.4-beta2 (S)

Python environments

uv(also inmodules/2.4)- Virtual environments

Python packages: venv

module load pythonhas a lot of packages built-in (checkpip list)- If you need something more, create a virtual environment:

module load python

python3 -m venv --system-site-packages ~/myvenv

source ~/myvenv/bin/activate

pip install ...

- Repeat the

mlandsource activateto return in a new shell

Too much typing

Put common sets of modules in a script

# File: ~/mymods

module reset

module load gcc python hdf5 git

source ~/myvenv/bin/activate

And “source” it when needed:

source ~/mymods

- Avoid putting module loads in

~/.bashrc

Other software

If you need something not in the base system, modules, or pip:

- Ask your mentor!

- Download and install it yourself

- Many packages provide install instructions

- Load modules to find dependencies

- Ask for help! #code-help, #sciware, #scicomp, scicomp@

Jupyter

- JupyterHub: https://jupyter.flatironinstitute.org/

- Login and start a server

- Default settings are fine: JupyterLab, 1 core

- To use an environment you need to create a kernel

- Create a kernel with the environment

# setup your environment ml python ... source ~/myvenv/bin/activate # capture it into a new kernel ml jupyter-kernels python -m make-custom-kernel mykernel - Reload jupyterhub and “mykernel” will show up providing the same environment

- Create a kernel with the environment

VS code remote

- In JupyterLab: File, Hub control panel, Stop My Server

Break

Survey

Running Jobs on the FI Cluster

Slurm and Parallelism

How to run jobs efficiently on Flatiron’s clusters

Slurm

- How do you share a set of computational resources among cycle-hungry scientists?

- With a job scheduler! Also known as a queue system

- Flatiron uses Slurm to schedule jobs

</img>

</img>

Slurm

- Wide adoption at universities and HPC centers: same commands work on most clusters (some details are different)

- Run any of these Slurm commands from a command line on rusty or popeye (or your workstation)

https://wiki.flatironinstitute.org/SCC/Software/Slurm

Batch file

Write a batch file called myjob.sbatch that specifies the resources needed.

Submitting a job

- Submit the job to the queue with

sbatch myjob.sbatch:

Submitted batch job 1234567 - Check the status with:

squeue --meorsqueue -j 1234567

Where is my output?

- By default, anything printed to

stdoutorstderrends up inslurm-<jobid>.outin your current directory - Can add

#SBATCH -o myoutput.log-e myerror.log

Loading environments

Good practice is to load the modules you need in the script:

#!/bin/bash

#SBATCH ...

module reset

module load gcc python

source ~/myvenv/bin/activate

# (or: source ~/mymods)

python3 myscript.py

Running Jobs in Parallel

- You’ve written a script to post-process a simulation output

- Have 10–10000 outputs to process

> ls ~/ceph/myproj data1.hdf5 data2.hdf5 data3.hdf5 [...] - Each file can be processed independently

- This pattern of independent parallel jobs is known as “embarrassingly parallel”

- Running 1000 independent jobs won’t work: limits on how many jobs can run

What about multiple things?

Let’s say we have 10 files, each using 1 GB and 1 CPU

Slurm Tip: Estimating Resource Requirements

- Jobs don’t necessarily run in order

- Specifying the smallest set of resources for your job will help it run sooner

- But don’t short yourself!

- Memory requirements can be hard to assess, especially if you’re running someone else’s code

Slurm Tip: Estimating Resource Requirements

- Guess based on your knowledge of the program. Think about the sizes of big arrays and any files being read

- Run a test job

- While the job is running, check

squeue,sshto the node, and runhtop - Check the actual usage of the test job with:

seff <jobid>Job Wall-clock time: how long it took in “real world” time; corresponds to#SBATCH -tMemory Utilized: maximum amount of memory used; corresponds to#SBATCH --mem

Slurm Tip: Choosing a Partition (CPUs)

- Use

-p gento submit small/test jobs,-p ccXfor real jobsgenhas small limits and higher priority

- The center and general partitions (

ccXandgen) always allocate whole nodes- All cores, all memory, reserved for you to make use of

- If your job doesn’t use a whole node, you can use the

genxpartition (allows multiple jobs per node) - Or run multiple things in parallel…

Running Jobs in Parallel

- What if we have 10000 things?

- What if tasks take a variable amount of time?

- The single-job approach allocates resources until the longest one finishes

- What if one task fails?

- Resubmitting requires a manual post-mortem

- disBatch (

module load disBatch)- Developed here at Flatiron: https://github.com/flatironinstitute/disBatch

disBatch

- Write a “task file” with one command-line command per line:

# File: jobs.disbatch python3 myjob.py data1.hdf5 >& data1.log python3 myjob.py data2.hdf5 >& data2.log python3 myjob.py data3.hdf5 >& data3.log - Can also use loops:

#DISBATCH REPEAT 13 python3 myjob.py data${DISBATCH_REPEAT_INDEX}.hdf5 >& data${DISBATCH_REPEAT_INDEX}.log - Submit a Slurm job, invoking the

disBatchexecutable with the task file as an argument:

sbatch -p genx -n 2 -t 0-2 disBatch jobs.disbatch

disBatch

disBatch

- When the job runs, it will write a

jobs.disbatch_*_status.txtfile, one line per task - Only limited output is captured: if you need logs, add

>&redirection for each task - Resubmit any jobs that failed with:

disBatch -r jobs.disbatch_*_status.txt -R

0 1 -1 worker032 8016 0 10.0486528873 1458660919.78 1458660929.83 0 "" 0 "" '...'

1 2 -1 worker032 8017 0 10.0486528873 1458660919.78 1458660929.83 0 "" 0 "" '...'

Slurm Tip: Tasks and threads

- For flexibility across nodes, prefer

-n/--ntasksto specify total tasks (not-N/--nodes+--ntasks-per-nodes) - If each task needs more CPUs, use

-c:#SBATCH --cpus-per-task=4 # number of threads per task export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK - Total cores is

-c*-n

GPUs

- GPU nodes are not exclusive (like genx), so you should specify:

-p gpu- Number of tasks:

-n1 - Number of cores:

--cpus-per-task=1or--cpus-per-gpu=1 - Amount of memory:

--mem=16Gor--mem-per-gpu=16G - Number of GPUs:

--gpus=or--gpus-per-task= - Acceptable GPU types:

-C v100|a100|h100|h200

Summary of Parallel Jobs

- Independent parallel jobs are a common pattern in scientific computing (parameter grid, analysis of multiple outputs, etc.)

- If you need more communication, check out MPI (e.g., mpi4py)

</img>

</img>

File Systems

https://wiki.flatironinstitute.org/SCC/Hardware/Storage

Home Directory

- Every user has a "home" directory at

/mnt/home/USERNAME(or~) - Home directory is shared on all nodes (rusty, workstations, gateway)

- Popeye (SDSC) has the same structure, but it's a different home directory

Home Directory

Your home directory is for code, notes, and documentation.

It is NOT for:

- Large data sets downloaded from other sites

- Intermediate files generated and then deleted during the course of a computation

- Large output files

You are limited to 900,000 files and 450 GB (if you go beyond this you will not be able to log in)

Backups (aka snapshots)

.snapshots directory like this:

ls .snapshots

cp -a .snapshots/@GMT-2021.09.13-10.00.55/lost_file lost_file.restored

.snapshotsis a special invisible directory and won't autocomplete- Snapshots happen once a day and are kept for 3-4 weeks

- There are separate long-term backups of home if needed (years)

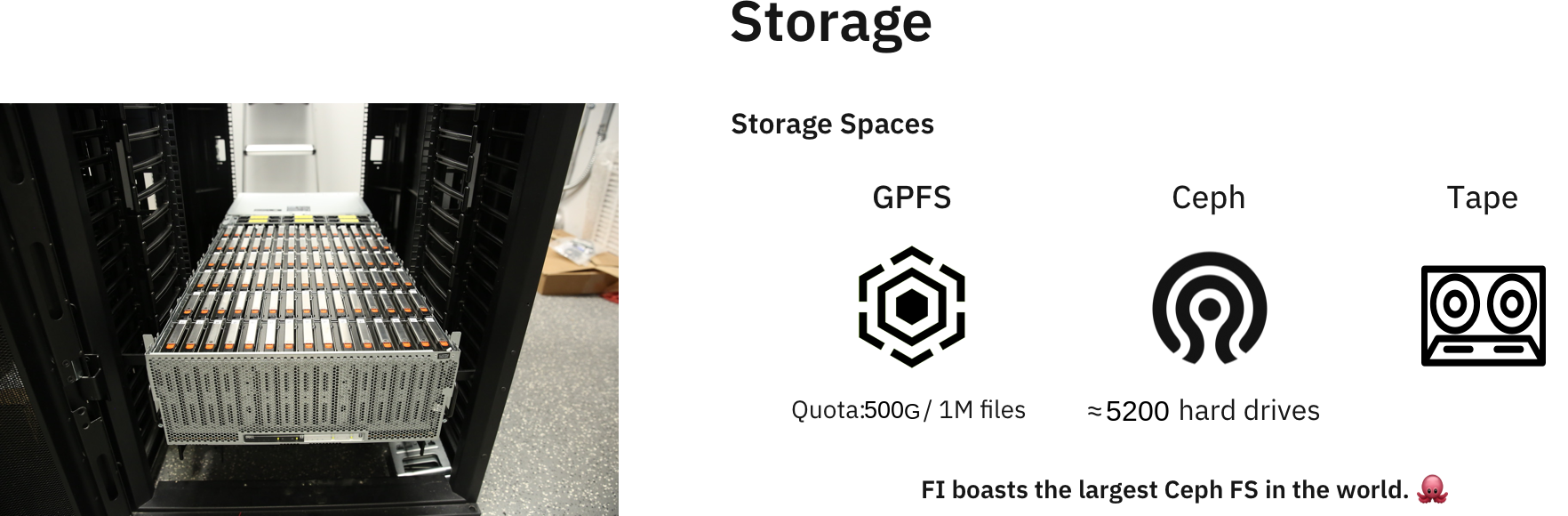

Ceph

- Pronounced as “sef”

- Rusty:

/mnt/ceph/users/USERNAME - Popeye:

/mnt/sdceph/users/USERNAME - For large, high-bandwidth data storage

- Not a “scratch” filesystem: no automatic deletion

- No backups*

- Do not put ≥ 1000 files in a directory

* .snap is coming soon

Summary: Persistent storage

Monitoring Usage: /mnt/home

View a usage summary:

module load fi-utils

fi-quota

To track down large files or file counts use:

ncdu -x --show-itemcount ~

Monitoring Usage: /mnt/ceph

- Don’t use

duorncdu, it’s slow - Use can use

ls -lh!

module load fi-utils

cephdu

Local Scratch

- Each node as a

/tmp(or/scratch) disk of ≥ 1 TB - For extremely fast access to smaller data, you can use the memory on each node under

/dev/shm(uses memory) - Both of these directories are cleaned up after each job

- Make sure you copy any important data/results over to

cephor yourhome

- Make sure you copy any important data/results over to

Summary: Temporary storage

Planned usage

- Everyone has planned usage estimates (entered annually)

- Storage, CPU time (core-hours), GPU time (GPU-hours), for each cluster (rusty, popeye)

- Monitor usage on FIDO, or:

module load fi-utils

fi-usage

Survey

Questions & Help

<img height=80% width=80% src=”assets/cluster/help.gif”>